AI for Audio Beyond Speech

Artificial intelligence has transformed how machines understand the world, not only through vision and language but also through sound. Speech recognition systems such as Alexa and Siri have become household names, yet audio AI covers far more than speech. AI now listens to much more than words: it is used to detect machine malfunctions and even to track wildlife in remote forests.

Difference Between Speech-Based AI and Environmental Sound AI

When most people think about AI and audio, they imagine voice assistants, transcription software, or chatbots, all of which deal with speech. This type of AI is concerned with recognizing and interpreting human language, transforming sound waves into words and meaning.

Environmental sound AI, by contrast, is concerned with non-speech sounds such as the hum of a car engine, birdsong, breaking glass, or a fire alarm. These sounds carry vital information about the environment or an event.

| Aspect | Speech-Based AI | Environmental Sound AI |
| --- | --- | --- |
| Focus | Human voices, words, and language | Natural or mechanical sounds |
| Goal | Understand spoken content | Detect, classify, or predict events |
| Examples | Alexa, Google Assistant, Speech-to-Text | Machinery fault detection, wildlife monitoring, disaster alerts |

Whereas speech-based AI relies on linguistic features such as phonemes and language models, environmental sound AI relies on acoustic patterns and frequency characteristics that reflect physical events.

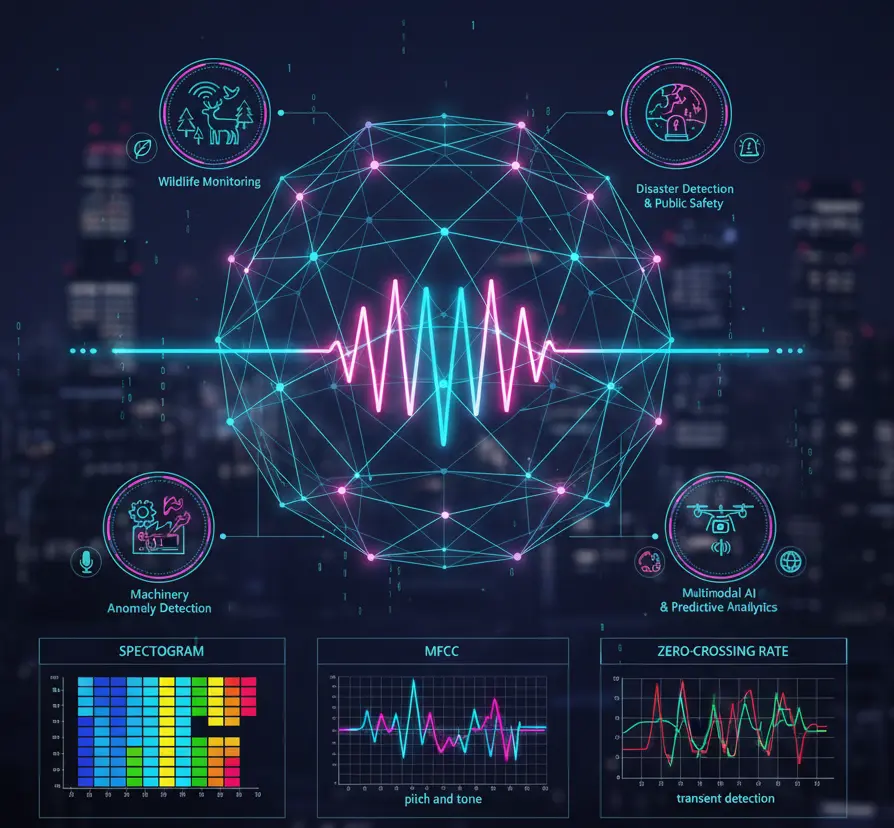

Use Cases: Beyond Human Speech

Anomaly Detection in Machines

Factories and other industrial sites now use AI audio systems to catch machine faults early by listening for changes in sound patterns. For example, when a motor begins to produce an unusual grinding noise, an AI model can flag the issue so that the motor can be serviced before it breaks down. This enables predictive maintenance and reduces costs and downtime.
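As a rough illustration of the idea, the sketch below flags audio frames whose loudness (RMS energy) deviates strongly from a baseline learned on healthy recordings. It is a deliberately simple statistical stand-in, not a trained neural network. The function names, the synthetic 50 Hz "motor hum", the 8 kHz sample rate, and the threshold of 6 standard deviations are all assumptions made for this example.

```python
import math
import random
import statistics

def frame_rms(samples, frame_size):
    """Return the RMS energy of each non-overlapping frame."""
    values = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        values.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return values

def fit_baseline(healthy_rms):
    """Summarize healthy frames by their mean and standard deviation."""
    return statistics.mean(healthy_rms), statistics.stdev(healthy_rms)

def find_anomalies(rms_values, baseline, threshold=6.0):
    """Indices of frames more than `threshold` std devs away from the baseline mean."""
    mean, std = baseline
    return [i for i, v in enumerate(rms_values) if abs(v - mean) > threshold * std]

def synthetic_motor(rng, n_frames, frame_size, noisy_frames=()):
    """Hypothetical stand-in for a microphone: a 50 Hz hum plus random noise.

    Frames listed in `noisy_frames` get much stronger noise to mimic grinding.
    """
    samples = []
    for f in range(n_frames):
        sigma = 1.0 if f in noisy_frames else 0.1
        for i in range(frame_size):
            t = (f * frame_size + i) / 8000.0  # assumed 8 kHz sample rate
            samples.append(math.sin(2 * math.pi * 50 * t) + rng.gauss(0.0, sigma))
    return samples

rng = random.Random(0)
FRAME = 400
baseline = fit_baseline(frame_rms(synthetic_motor(rng, 20, FRAME), FRAME))
recording = synthetic_motor(rng, 10, FRAME, noisy_frames=(6, 7))
# The flagged list should include the two "grinding" frames.
print(find_anomalies(frame_rms(recording, FRAME), baseline))
```

A production system would likely replace the single RMS value with richer features and a learned model, but the structure stays the same: learn what "normal" sounds like, then score new audio against it.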

Wildlife Monitoring

In ecology, AI models analyze sound recorded in forests and oceans to recognize animal species by their calls. This lets scientists monitor biodiversity and migration and detect illegal activities such as poaching or deforestation. In this way, AI keeps a quiet, constant check on the health of the planet.

Disaster Detection and Public Safety

AI systems can detect events such as earthquakes, floods, or wildfires by recognizing characteristic sound patterns like rumbling or cracking. Sensors in smart cities can trigger an alert when they pick up abnormal ambient sounds, speeding up the authorities' response in an emergency.

Features for Sound Analysis: MFCC, Spectrograms, and More

To interpret sound, an AI system must first convert raw audio into meaningful features. The most commonly used features are:

- MFCC (Mel-Frequency Cepstral Coefficients)

MFCCs compactly summarize the short-term power spectrum of a sound on the perceptually motivated mel scale, which helps the system capture its tonal quality (timbre). They are widely used to characterize audio signals in both speech and environmental sound analysis.

- Spectrograms

A spectrogram is a visual representation of sound that shows how the frequency content of an audio signal changes over time. AI models frequently use Convolutional Neural Networks to process spectrograms as if they were images.

- Chroma and Zero-Crossing Rate

These additional features help detect musical tones and identify sudden changes in sound patterns. They can be applied in music classification or industrial noise monitoring.

In simple terms, sound is first converted into visual or numerical data so that AI models can interpret it.
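To make the features above more concrete, here is a minimal sketch that computes a zero-crossing rate, a magnitude spectrogram, and the Hz-to-mel conversion that underlies MFCCs, using only the Python standard library. It is not a full MFCC pipeline (which would add a mel filterbank, a logarithm, and a discrete cosine transform), and in practice a library such as librosa would normally be used instead. The function names, the synthetic 1000 Hz tone, and the frame and hop sizes are assumptions chosen for illustration.

```python
import cmath
import math

def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)

def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 after applying a Hann window."""
    n = len(frame)
    windowed = [x * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(windowed)))
            for k in range(n // 2 + 1)]

def spectrogram(samples, frame_size, hop):
    """List of magnitude spectra, one per overlapping frame (time x frequency)."""
    return [magnitude_spectrum(samples[s:s + frame_size])
            for s in range(0, len(samples) - frame_size + 1, hop)]

def hz_to_mel(freq_hz):
    """Common mel-scale formula: 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)

SAMPLE_RATE = 8000   # assumed sample rate in Hz
FRAME_SIZE = 128     # samples per frame
HOP = 64             # samples between frame starts

# Synthetic 1000 Hz tone standing in for a real recording.
tone = [math.sin(2 * math.pi * 1000 * i / SAMPLE_RATE + 0.3) for i in range(512)]

spec = spectrogram(tone, FRAME_SIZE, HOP)
first = spec[0]
peak_bin = max(range(len(first)), key=first.__getitem__)
print("frames:", len(spec), "bins per frame:", len(first))
print("peak frequency (Hz):", peak_bin * SAMPLE_RATE / FRAME_SIZE)
print("zero-crossing rate:", zero_crossing_rate(tone[:FRAME_SIZE]))
print("1000 Hz in mels:", hz_to_mel(1000.0))
```

The nested list returned by `spectrogram` is exactly the kind of two-dimensional, image-like array that a convolutional network would take as input.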

Example Projects and Datasets

Here are some real-world datasets and project ideas that drive research and development in audio-based AI:

- UrbanSound8K – Contains over 8,000 labeled urban sounds such as sirens, drilling, and engine noise, used for city noise classification.

- ESC-50 – Includes 2,000 environmental recordings across 50 classes such as rain, insects, and barking dogs.

- AudioSet by Google – A massive dataset with over two million labeled YouTube clips covering everything from laughter to explosions.

- Project Idea – Build a smart IoT device that listens for abnormal factory sounds using a neural network trained on MFCC features. The device could instantly alert technicians when it detects an unusual pattern.

The Future: Multimodal AI (Audio, Video, and Text)

The future of audio AI is multimodal intelligence: systems that combine sound, vision, and language to understand a situation in greater detail.

Consider a drone-based disaster monitoring solution that not only sees smoke through its camera but also hears the fire crackling and reads weather updates to make precise real-time predictions. Or consider smart surveillance systems that combine sound detection, such as a scream or a crash, with visual confirmation to alert authorities more quickly.

Combining audio with other types of data makes AI systems more context-aware, more precise, and much more effective.
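One simple way to combine modalities is late fusion, where each model produces its own confidence score and the scores are merged afterwards. The sketch below assumes two hypothetical detectors that each output a score between 0 and 1 for the same event. The function names, the equal weighting, and the 0.7 alert threshold are illustrative assumptions, not settings from any particular system.

```python
def fuse_scores(audio_score, video_score, audio_weight=0.5):
    """Weighted average of two per-event confidence scores in [0, 1]."""
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must be between 0 and 1")
    return audio_weight * audio_score + (1.0 - audio_weight) * video_score

def should_alert(audio_score, video_score, threshold=0.7, audio_weight=0.5):
    """Raise an alert only when the fused confidence reaches the threshold."""
    return fuse_scores(audio_score, video_score, audio_weight) >= threshold

# A loud crash that the camera also confirms triggers an alert;
# a loud sound with no visual confirmation does not.
print(should_alert(audio_score=0.9, video_score=0.8))
print(should_alert(audio_score=0.9, video_score=0.1))
```

Requiring agreement between modalities in this way trades a little sensitivity for fewer false alarms. Real systems may instead fuse learned feature representations rather than final scores.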

Conclusion

AI for audio beyond speech is quietly reshaping industries. Sound is now a valuable source of intelligence for keeping machines reliable, safeguarding ecosystems, and saving lives. As AI advances, its ability to listen to, understand, and act on non-speech audio will unlock new levels of automation and awareness in our interconnected world.

In a future where machines can both see and hear, AI will not just talk back; it will truly listen.