Few-Shot and Zero-Shot Learning: Redefining How Machines Learn

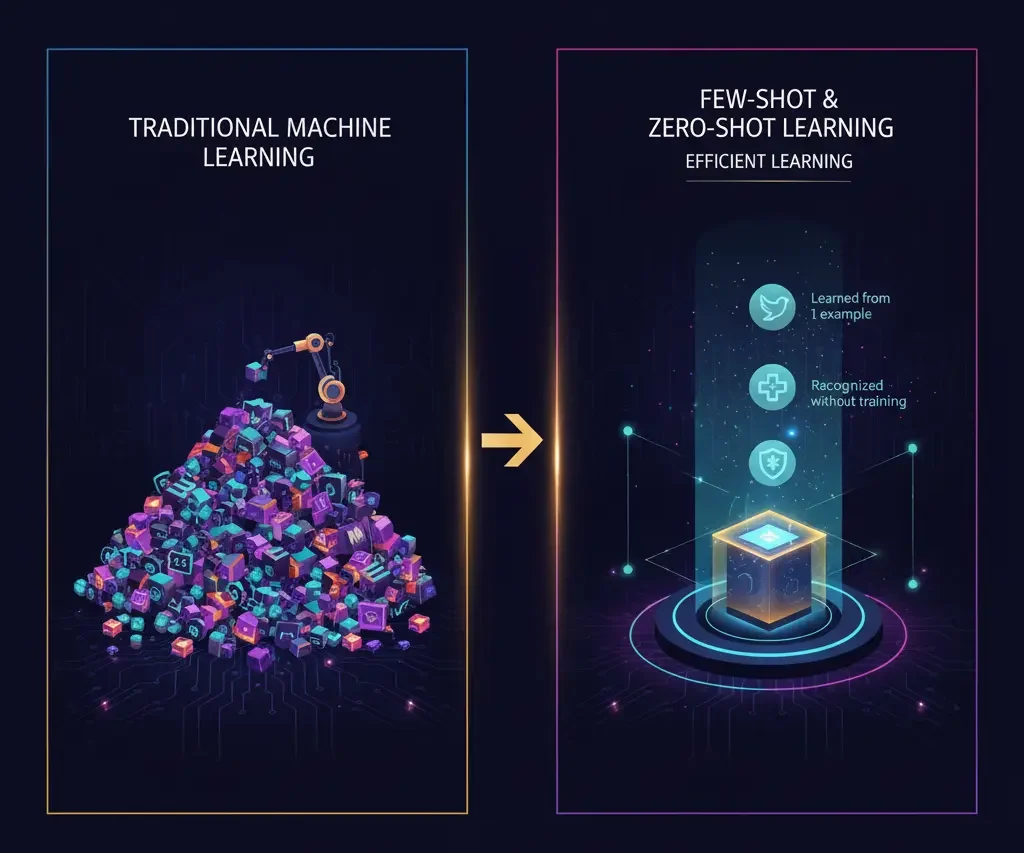

Artificial intelligence has made huge strides in the past decade, but one major limitation remains: its dependence on large amounts of labeled data. Traditional machine learning models often need thousands or even millions of labeled examples to perform well. In the real world, however, such datasets are often unavailable, expensive, or time-consuming to create. Few-shot and zero-shot learning address this problem by letting machines learn new concepts from only a handful of examples, or from no examples of the new concept at all.

What Are Few-Shot and Zero-Shot Learning?

To understand these approaches, let’s first look at how traditional machine learning works.

Traditional Machine Learning

Traditional ML models are trained on large datasets containing many examples of each class. For example, to recognize animals, a model might need thousands of cat and dog images. If a new animal type is introduced, the model usually has to be retrained or fine-tuned on a substantial amount of new labeled data.

Few-Shot Learning (FSL)

Few-Shot Learning enables models to recognize new categories or perform new tasks after seeing only a few examples, for instance one to five per class. It is inspired by how humans learn: we do not need to see hundreds of examples to identify a new object. Many few-shot methods use meta-learning (learning how to learn): the model is trained on many small practice tasks so that it can generalize to a new task from very limited data.
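One widely used meta-learning approach, prototypical networks, classifies a new example by comparing it with the average embedding (the “prototype”) of the few labeled examples of each class. The sketch below shows only that classification step, under simplifying assumptions: `embed` is a hypothetical placeholder that just normalizes raw features, whereas a real system would use a neural network trained on many few-shot episodes, and the feature vectors and class names (`okapi`, `tapir`) are made up.

```python
import math
from collections import defaultdict


def embed(features):
    # Hypothetical placeholder: a real few-shot system would use a neural
    # network trained with meta-learning. Here we only L2-normalize the input.
    norm = math.sqrt(sum(v * v for v in features)) or 1.0
    return [v / norm for v in features]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_prototypes(support_set):
    """Average the embeddings of the few labeled examples of each class."""
    grouped = defaultdict(list)
    for features, label in support_set:
        grouped[label].append(embed(features))
    prototypes = {}
    for label, vectors in grouped.items():
        dim = len(vectors[0])
        prototypes[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return prototypes


def classify(query, prototypes):
    """Assign the query to the class whose prototype is nearest."""
    q = embed(query)
    return min(prototypes, key=lambda label: squared_distance(q, prototypes[label]))


# A made-up 2-way, 2-shot support set: two classes, two examples each.
support = [
    ([0.9, 0.1, 0.0], "okapi"),
    ([1.0, 0.2, 0.1], "okapi"),
    ([0.1, 0.9, 0.3], "tapir"),
    ([0.0, 1.0, 0.2], "tapir"),
]
prototypes = build_prototypes(support)
print(classify([0.8, 0.3, 0.1], prototypes))  # okapi
```

Adding a new class here only means averaging its few support examples, with no retraining; in an actual prototypical network, the meta-learning happens when `embed` itself is trained.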

Zero-Shot Learning (ZSL)

Zero-Shot Learning takes this a step further: these models can identify objects or perform tasks without having seen any labeled examples of them. Instead, they rely on semantic information, such as attributes or text descriptions, that relates unknown concepts to known ones. For instance, if a model knows what a horse looks like and what stripes look like, and is told that a zebra is a horse-like animal with black-and-white stripes, it can recognize a zebra even though it has never seen one before.
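The zebra example can be expressed as attribute-based zero-shot classification. In this minimal sketch, each class is described by a hand-written attribute vector, and an image is assigned to the class whose description best matches the attributes predicted for it. The attribute list, the class vectors, and the `attribute_scores` inputs are all hypothetical; in practice the scores would come from an attribute detector trained only on the seen classes, and many systems use word embeddings or text encoders instead of hand-made attributes.

```python
import math

# Hypothetical attribute vocabulary, in the order used by the vectors below.
ATTRIBUTES = ["horse-like body", "stripes", "black-and-white coloring"]

# Class descriptions written by a person, not learned from images.
# "zebra" is the unseen class: no zebra images were used in training.
CLASS_DESCRIPTIONS = {
    "horse": [1.0, 0.0, 0.0],
    "tiger": [0.0, 1.0, 0.0],
    "panda": [0.0, 0.0, 1.0],
    "zebra": [1.0, 1.0, 1.0],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_predict(attribute_scores):
    """Pick the class whose description best matches the predicted attributes.

    attribute_scores stands in for the output of an attribute detector
    trained only on seen classes (horse, tiger, panda).
    """
    return max(CLASS_DESCRIPTIONS, key=lambda c: cosine(attribute_scores, CLASS_DESCRIPTIONS[c]))


print(zero_shot_predict([0.9, 0.8, 0.7]))    # zebra
print(zero_shot_predict([0.95, 0.05, 0.1]))  # horse
```

The unseen class is recognized purely through its description: the detector only needs to have learned “horse-like body,” “stripes,” and “black-and-white coloring” from other animals.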

Comparison: Traditional vs. Few-Shot vs. Zero-Shot Learning

| Feature | Traditional Machine Learning | Few-Shot Learning | Zero-Shot Learning |
|---|---|---|---|
| Data requirement | Thousands of labeled samples per class | Only a few labeled examples per new class | No labeled examples for unseen classes |
| Learning method | Task-specific supervised learning | Often meta-learning (learning to learn) | Semantic knowledge and transfer learning |
| Adaptability | Poor for new or unseen tasks | Adapts to new classes from a few samples | Can generalize to unseen classes that are well described |
| Example | Classifying cats and dogs | Learning to recognize a new animal from 5 images | Identifying objects described only in words |
| Use case | Image classification, spam detection | Personalized AI, medical diagnosis | Vision-language models, intelligent search |

Why Few-Shot and Zero-Shot Learning Matter

In real-world applications, labeled data is not always easy to obtain. By reducing how much labeled data is needed, few-shot and zero-shot learning make AI systems cheaper to build and quicker to adapt to new tasks, opening doors for innovation across industries.

- Healthcare: Detect rare diseases with limited patient images.

- Finance: Identify new fraud patterns without retraining.

- Cybersecurity: Recognize unseen types of attacks or anomalies.

- Personalization: Adapt instantly to user behavior or preferences.

These approaches make AI systems more flexible and able to perform effectively even in dynamic or data-scarce environments.

Key Models and Technologies

GPT (Generative Pretrained Transformer)

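Large language models such as GPT-4 and GPT-5 can handle tasks in zero-shot and few-shot settings. They can perform translation, summarization, coding, and question answering without task-specific retraining, guided only by the prompt: a zero-shot prompt contains just an instruction, while a few-shot prompt also includes a handful of worked examples. Learning from examples placed in the prompt is often called in-context learning.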
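The difference between zero-shot and few-shot prompting lies entirely in the prompt text. The helper functions below are a hypothetical illustration that only builds the prompt strings; they do not call any model API, and the wording and `English:`/`French:` layout are assumptions rather than a required format.

```python
def zero_shot_prompt(text):
    # Only an instruction: the model relies on what it learned in pretraining.
    return f"Translate the following English sentence into French.\n\nEnglish: {text}\nFrench:"


def few_shot_prompt(text, examples):
    # The same instruction plus a few worked examples placed directly in the prompt.
    # The model's weights are not updated; it picks up the pattern "in context".
    lines = ["Translate the following English sentences into French.", ""]
    for english, french in examples:
        lines.append(f"English: {english}")
        lines.append(f"French: {french}")
        lines.append("")
    lines.append(f"English: {text}")
    lines.append("French:")
    return "\n".join(lines)


examples = [
    ("Good morning.", "Bonjour."),
    ("Thank you very much.", "Merci beaucoup."),
]
print(zero_shot_prompt("Where is the station?"))
print()
print(few_shot_prompt("Where is the station?", examples))
```

In both cases the model itself stays unchanged; the few-shot version simply gives it a pattern to continue.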

CLIP (Contrastive Language–Image Pretraining)

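Developed by OpenAI, CLIP learns from pairs of images and text captions, mapping both into a shared embedding space. This lets it classify images into categories it was never explicitly trained on by comparing an image with textual descriptions. For example, it can judge whether a picture matches the description “snow leopard playing guitar,” even if it has never seen such an image.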
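CLIP-style zero-shot classification can be sketched as follows: each candidate label is turned into a caption such as “a photo of a {label}.”, the image and the captions are embedded and normalized, and the label whose caption has the highest cosine similarity to the image wins, with a scaled softmax turning similarities into probabilities. In this sketch the encoders are replaced by a hypothetical lookup table of made-up 3-dimensional vectors (`FAKE_TEXT_EMBEDDINGS`) and a made-up image vector; a real pipeline would obtain these from CLIP's trained image and text encoders. The `logit_scale` of 100 is an assumed value (CLIP learns this scale during training).

```python
import math

# Hypothetical stand-ins for CLIP's text encoder output. These vectors are
# made up for illustration; real CLIP embeddings have hundreds of dimensions.
FAKE_TEXT_EMBEDDINGS = {
    "a photo of a snow leopard.": [0.9, 0.1, 0.2],
    "a photo of a house cat.": [0.6, 0.7, 0.1],
    "a photo of a guitar.": [0.1, 0.2, 0.95],
}


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def zero_shot_classify(image_embedding, labels, logit_scale=100.0):
    """Score each label by cosine similarity with the image, then apply softmax."""
    img = normalize(image_embedding)
    logits = []
    for label in labels:
        txt = normalize(FAKE_TEXT_EMBEDDINGS[f"a photo of a {label}."])
        logits.append(logit_scale * sum(a * b for a, b in zip(img, txt)))
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


labels = ["snow leopard", "house cat", "guitar"]
probs = zero_shot_classify([0.85, 0.15, 0.25], labels)  # made-up image embedding
print(max(probs, key=probs.get))  # snow leopard
```

Because the label set is just a list of strings, new categories can be added at prediction time without retraining, which is what makes the approach zero-shot.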

DALL·E

Another OpenAI model, DALL·E, can generate creative images from text prompts. It demonstrates strong zero-shot understanding by combining unrelated concepts, such as “a teapot in the shape of a castle.”

Real-World Applications

Few-shot and zero-shot learning are already transforming industries:

- Chatbots and Virtual Assistants: Understand new user intents with minimal training data.

- Robotics: Learn new actions or environments from a few demonstrations.

- Search Engines: Handle complex or rare queries more intelligently.

- Creative AI Tools: Generate art, design, or content from textual instructions.

These techniques let businesses deploy AI faster, adapt to new trends, and automate more tasks without large data collection costs.

Challenges and Limitations

While few-shot and zero-shot learning bring powerful capabilities, they are not without challenges:

- Bias and Fairness: Models trained on large internet datasets may carry hidden biases.

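- Generalization Errors: With so little data, predictions can be overly broad or simply wrong, and zero-shot models often favor classes they saw during training over unseen ones.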

- Explainability: It remains difficult to understand how a model infers knowledge about new concepts.

- Domain Shift: Models may struggle when facing data from entirely new environments.

Researchers continue to refine these systems to improve accuracy, reduce bias, and enhance interpretability.

The Future of Data-Efficient AI

Few-shot and zero-shot learning represent a major step toward more human-like learning in AI. Instead of relying purely on data quantity, the focus is shifting toward data efficiency and reasoning ability. Future systems are likely to combine modalities such as text, vision, and audio even more closely to understand and interact with the world.

As this technology advances, AI is likely to become not just more powerful but also more accessible, adaptable, and sustainable, allowing even small businesses to benefit from intelligent automation without massive datasets.