Adversarial Attacks on AI: The Hidden Threat Behind Intelligent Systems

Artificial Intelligence has become the backbone of modern technology, from self-driving cars and facial recognition systems to voice assistants and medical diagnosis tools. However, with great power comes great vulnerability. A growing concern in the AI world is adversarial attacks, where tiny, often invisible changes to input data can fool even the most advanced AI systems into making wrong decisions.

What Are Adversarial Attacks?

An adversarial attack is a deliberate attempt to trick an AI or machine learning model by slightly altering the input data. These modifications are usually imperceptible to humans but can completely mislead an AI model.

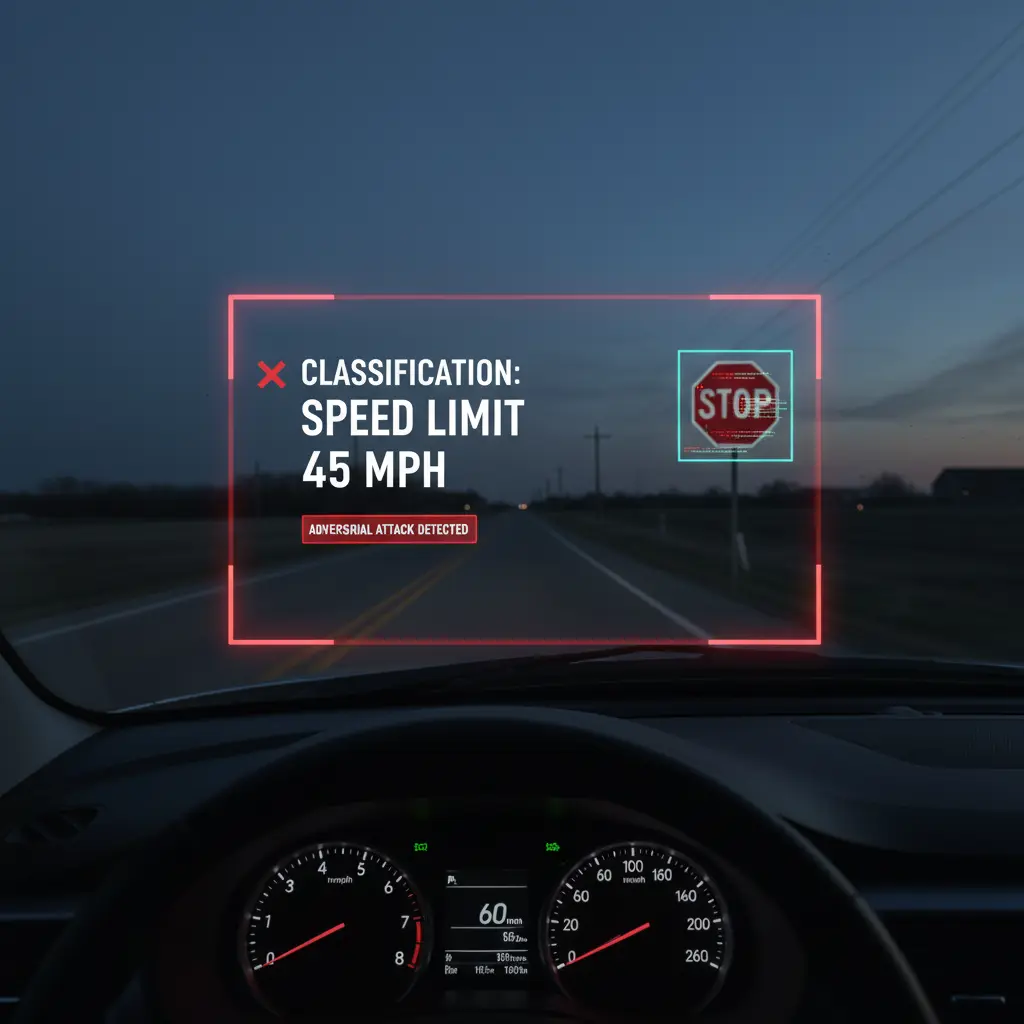

For example, adding carefully crafted noise to a photo of a stop sign, or a few small stickers to the physical sign, can make a traffic-sign classifier of the kind used in self-driving cars interpret it as a speed limit sign. To a human, it is still obviously a stop sign, but to the AI its meaning is completely different.

In simple terms, adversarial attacks exploit the statistical patterns a model has learned to rely on, revealing that AI models do not always interpret content the way humans do.
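To make the idea concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), against a tiny logistic-regression classifier. The weights, bias, input, `epsilon` value, and helper names are all hypothetical and chosen for illustration; a real attack would compute the same input gradient through a deep network with an automatic-differentiation library. FGSM moves every input feature by at most `epsilon` in whichever direction increases the model's loss.

```python
import math

# Hypothetical toy model: logistic regression over four input features in [0, 1].
WEIGHTS = [2.0, -1.5, 0.5, 1.0]
BIAS = -0.5


def predict_proba(x):
    """Probability that input x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def loss_gradient_wrt_input(x, y):
    """Gradient of the binary cross-entropy loss with respect to the input.

    For logistic regression this has the closed form (p - y) * w.
    """
    p = predict_proba(x)
    return [(p - y) * w for w in WEIGHTS]


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, epsilon):
    """Fast gradient sign method: shift each feature by epsilon in the direction
    that increases the loss, then clip back to the valid range [0, 1]."""
    grad = loss_gradient_wrt_input(x, y)
    return [min(1.0, max(0.0, xi + epsilon * sign(g))) for xi, g in zip(x, grad)]


clean = [0.6, 0.2, 0.5, 0.4]  # an input the toy model assigns to class 1
adversarial = fgsm(clean, y=1, epsilon=0.25)
print(f"clean:       p(class 1) = {predict_proba(clean):.3f}")
print(f"adversarial: p(class 1) = {predict_proba(adversarial):.3f}")
```

With only four features the step has to be fairly large (0.25 on a 0 to 1 scale) to flip the prediction. In an image with thousands of pixels, the same per-pixel budget is spent on every pixel and the effects add up, which is why far smaller, visually imperceptible changes can be enough.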

Types of Adversarial Attacks

Adversarial attacks come in various forms depending on the data type and model they target. Here are some of the most common categories:

1. Image Attacks

Small changes in pixel values can drastically affect image classification. For example:

- Changing pixel values by amounts too small to notice, or altering just a few pixels, can cause a model to misidentify a cat as a dog.

- Adding stickers or graffiti to traffic signs can fool autonomous vehicle systems.
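The "few pixels" case corresponds to sparse attacks, which change only a handful of pixels but may change each one a lot, unlike attacks such as FGSM that nudge every pixel slightly. Below is a simple greedy sketch against a hypothetical linear "cat vs. dog" scorer on a 4x4 grayscale image. The weights, bias, labels, and function names are invented for illustration; published sparse attacks, such as the one-pixel attack (which uses differential evolution), rely on more elaborate searches against real networks.

```python
# Hypothetical linear scorer over a flattened 4x4 grayscale image (16 pixels in [0, 1]).
# A positive score means "cat"; anything else means "dog".
WEIGHTS = [0.1, -0.2, 0.05, 0.3,
           -0.1, 0.8, 0.0, -0.05,
           0.2, -0.3, 0.9, 0.1,
           0.0, 0.15, -0.25, 0.05]
BIAS = -0.6


def score(img):
    return sum(w * p for w, p in zip(WEIGHTS, img)) + BIAS


def label(img):
    return "cat" if score(img) > 0 else "dog"


def few_pixel_attack(img, max_pixels):
    """Greedily set at most max_pixels pixels to 0.0 or 1.0 to push "cat" toward "dog".

    Because the scorer is linear, each pixel's effect is independent, so pixels can
    be ranked once by how much pushing them to their best extreme lowers the score.
    """
    candidates = []
    for i, (w, p) in enumerate(zip(WEIGHTS, img)):
        target = 0.0 if w > 0 else 1.0          # extreme that lowers w * p the most
        candidates.append((w * (p - target), i, target))
    candidates.sort(reverse=True)               # biggest score reduction first

    adv, changed = list(img), []
    for drop, i, target in candidates[:max_pixels]:
        if score(adv) <= 0 or drop <= 0:        # already flipped, or no further gain
            break
        adv[i] = target
        changed.append(i)
    return adv, changed


image = [0.5] * 16                              # a flat gray image scored as "cat"
adv_image, changed = few_pixel_attack(image, max_pixels=3)
print(label(image), "->", label(adv_image), "after changing pixels", changed)
```

Here a single pixel suffices because the invented weights lean heavily on pixel 10.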

2. Audio Attacks

AI systems that process sound, such as voice assistants or speech recognition tools, can also be deceived.

- Attackers can embed hidden commands in audio clips that humans cannot hear or do not notice, but that AI systems recognize as speech.

- A malicious command like “open the door” could be buried in background music and still be recognized by a smart speaker.
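How quiet can a hidden command be? One common way to quantify this is to compare the peak level of the perturbation with the peak level of the audio it is hidden in, in decibels. The sketch below only performs that measurement: the "music" and the "hidden" signal are plain sine tones standing in for real audio, and the 16 kHz sample rate and helper names are assumptions. A real attack would also optimize the perturbation so that a speech recognizer transcribes it as a chosen command, which this code does not attempt.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed)


def tone(freq_hz, amplitude, seconds):
    """A pure sine tone as a list of float samples."""
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def peak_db(signal):
    """Peak level in decibels relative to full scale: 20 * log10(max |sample|)."""
    return 20 * math.log10(max(abs(s) for s in signal))


def relative_loudness_db(perturbation, host):
    """Perturbation level relative to the host audio in dB (negative means quieter)."""
    return peak_db(perturbation) - peak_db(host)


music = tone(440, amplitude=0.5, seconds=1.0)      # stand-in for background music
hidden = tone(1000, amplitude=0.005, seconds=1.0)  # stand-in for an optimized perturbation

# Mix the two and clip to the valid sample range [-1, 1] before playback.
mixed = [max(-1.0, min(1.0, m + h)) for m, h in zip(music, hidden)]

print(f"perturbation is {relative_loudness_db(hidden, music):.1f} dB relative to the music")
```

Since 20 · log10(0.005 / 0.5) = 20 · log10(0.01) = -40, a perturbation at one hundredth of the music's amplitude sits 40 dB below it; the more negative this figure, the harder the perturbation is to notice under the louder host audio.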

3. Text Attacks

Even Natural Language Processing (NLP) systems are not safe.

- Subtle changes such as replacing a few words or altering spelling can change a sentiment analysis result from positive to negative.

- Spammers and disinformation campaigns can exploit these weaknesses to slip content past automated moderation filters.

These examples highlight how adversarial manipulation can impact various AI domains, from computer vision to natural language understanding.
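The word-swap and misspelling attacks on text can be sketched as a greedy search. The example below targets a hypothetical lexicon-based sentiment scorer; the word weights, the synonym table, the single typo rule (doubling the second letter), and the function names are all invented for illustration. Attacks on real NLP models search much larger candidate sets and usually add constraints, such as embedding similarity, to keep the meaning intact.

```python
# Hypothetical lexicon: positive weights push toward "positive", negative toward "negative".
LEXICON = {"great": 2.0, "good": 1.0, "decent": 0.5, "fine": 0.2,
           "loved": 2.0, "liked": 0.8, "boring": -1.5, "slow": -0.7}
# Hypothetical synonym table the attacker may draw replacements from.
SYNONYMS = {"great": ["decent", "fine"], "good": ["fine"], "loved": ["liked"]}


def sentiment_score(words):
    return sum(LEXICON.get(w, 0.0) for w in words)


def classify(words):
    return "positive" if sentiment_score(words) > 0 else "negative"


def candidates(word):
    """Replacement candidates: listed synonyms plus one simple typo."""
    options = list(SYNONYMS.get(word, []))
    if len(word) > 2:
        options.append(word[:2] + word[1:])   # double the second letter: "great" -> "grreat"
    return options


def word_substitution_attack(sentence, max_swaps=2):
    """Greedily apply the single swap that lowers the score most, until the
    label flips from positive to negative or the swap budget runs out."""
    words = sentence.lower().split()
    for _ in range(max_swaps):
        if classify(words) == "negative":
            break
        best_score, best_words = sentiment_score(words), None
        for i, word in enumerate(words):
            for alt in candidates(word):
                trial = words[:i] + [alt] + words[i + 1:]
                if sentiment_score(trial) < best_score:
                    best_score, best_words = sentiment_score(trial), trial
        if best_words is None:                # no swap lowers the score further
            break
        words = best_words
    return " ".join(words)


review = "The plot was slow but the acting was great"
attacked = word_substitution_attack(review)
print(classify(review.lower().split()), "->", classify(attacked.split()), ":", attacked)
```

In this toy case the typo wins because the misspelled word simply drops out of the lexicon, while a human reader still sees "great".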

Why Adversarial Attacks Are Dangerous

The danger of adversarial attacks lies in their real-world consequences. In high-stakes applications, even a small error can lead to massive losses or risks to human life.

- Autonomous Vehicles: If a car misinterprets a stop sign, it can cause accidents.

- Healthcare: A medical AI system might misclassify a tumor as benign due to manipulated imaging data.

- Security Systems: Facial recognition can be tricked into granting access to unauthorized individuals using adversarially modified images.

These attacks expose how fragile AI models can be and how easily they can be manipulated if not properly secured.

Defense Mechanisms and Robustness Research

Researchers around the world are actively developing methods to defend against adversarial attacks and make AI systems more robust. Some key approaches include:

1. Adversarial Training

This method generates adversarial examples during training and adds them, with their correct labels, to the training data. The model learns to classify these perturbed inputs correctly, which makes it more resilient to similar attacks.
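A minimal sketch of adversarial training, assuming a logistic-regression model on a tiny hand-made 2D dataset (data, hyperparameters, and helper names are all hypothetical): at every epoch, FGSM examples are generated against the current weights and added, with their correct labels, to the batch used for the gradient step. Real pipelines apply the same idea to deep networks and often use stronger multi-step attacks such as projected gradient descent (PGD).

```python
import math

# Hypothetical, linearly separable 2D dataset: (features, label).
DATA = [([1.0, 0.8], 1), ([0.9, 1.2], 1), ([1.3, 1.0], 1),
        ([-1.0, -0.9], 0), ([-1.2, -1.1], 0), ([-0.8, -1.0], 0)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM against the current model: the input gradient of the loss is (p - y) * w."""
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, epochs=200, lr=0.5):
    """Full-batch gradient descent on cross-entropy loss.
    With eps > 0, each epoch also trains on FGSM versions of every example."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        batch = list(data)
        if eps > 0:
            batch += [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict(w, b, x) - y            # d(loss)/d(logit)
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps = 0) or on FGSM-perturbed inputs (eps > 0)."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += (predict(w, b, x_eval) > 0.5) == (y == 1)
    return correct / len(data)


w_std, b_std = train(DATA)
w_adv, b_adv = train(DATA, eps=0.3)
for name, (w, b) in [("standard", (w_std, b_std)), ("adversarial", (w_adv, b_adv))]:
    print(name, "clean acc:", accuracy(w, b, DATA), "FGSM acc:", accuracy(w, b, DATA, eps=0.3))
```

On data this well separated, the benefit may not show up in the accuracy numbers; the sketch is meant to show the structure of the training loop rather than to measure robustness.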

2. Input Sanitization

Filtering and preprocessing input data before feeding it into a model, for example by reducing color depth, smoothing, or compressing images, can help remove potential adversarial noise.
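One simple preprocessing step of this kind is bit-depth reduction, one of the "feature squeezing" transforms studied in the research literature: pixel values are rounded to a small number of levels, so any perturbation smaller than half a quantization step is erased. The sketch assumes pixels in [0, 1]; the 2-bit setting, the sample values, and the function name are chosen for illustration.

```python
def reduce_bit_depth(pixels, bits):
    """Round each pixel value in [0, 1] to the nearest of 2**bits evenly spaced levels.
    (Python's round() sends exact ties to the even level.)"""
    levels = 2 ** bits - 1
    return [round(p * levels) / levels for p in pixels]


clean = [0.0, 1 / 3, 2 / 3, 1.0]                 # values that already sit on the 2-bit grid
noise = [0.05, 0.05, -0.05, -0.05]               # small perturbation, below half a step (1/6)
noisy = [min(1.0, max(0.0, c + n)) for c, n in zip(clean, noise)]

print(reduce_bit_depth(noisy, bits=2) == reduce_bit_depth(clean, bits=2))
```

The same arithmetic shows the limitation: a perturbation larger than half a step, or one optimized with the squeezing step included in the attack loop, survives the rounding, so preprocessing on its own is not a reliable defense.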

3. Defensive Distillation

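This technique first trains a model whose softmax outputs are softened by a high “temperature”, then trains a second, distilled model on those soft probability labels instead of hard class labels. The distilled model has smoother decision surfaces and is less sensitive to small input perturbations, although later, stronger attacks showed that it can still be fooled.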
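The key ingredient of defensive distillation is a softmax with a temperature parameter T: dividing the logits by a large T turns a confident, nearly one-hot output into soft labels for the second model to learn from. The sketch shows that step and the cross-entropy loss the distilled model would minimize; the logits, T = 20, and helper names are hypothetical, and the actual training of both networks is omitted.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, soft_targets, temperature):
    """Cross-entropy between the teacher's soft labels and the student's softened output."""
    q = softmax(student_logits, temperature)
    return -sum(t * math.log(qi) for t, qi in zip(soft_targets, q))


teacher_logits = [8.0, 2.0, 1.0]        # hypothetical logits from the first (teacher) model
T = 20.0

hard = softmax(teacher_logits, 1.0)     # nearly one-hot
soft = softmax(teacher_logits, T)       # soft labels used to train the distilled model
print([round(p, 3) for p in hard])
print([round(p, 3) for p in soft])
print(distillation_loss([7.0, 2.5, 0.5], soft, T))
```

After training at temperature T, the distilled model is deployed at T = 1, where its softmax outputs saturate and the input gradients an attacker would follow become very small.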

4. Detection Systems

AI models can be paired with detectors that monitor for suspicious inputs or behaviors. If an anomaly is detected, the system can flag or reject the input.
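One published detection approach, feature squeezing, compares a model's prediction on the raw input with its prediction on a "squeezed" copy (here, bit-depth reduction) and flags inputs where the two disagree strongly. The sketch applies that idea to a hypothetical four-pixel logistic-regression model; the weights, inputs, helper names, and the threshold of 0.1 are invented, and in practice the threshold would be tuned on clean data to reach an acceptable false-positive rate.

```python
import math

# Hypothetical model: logistic regression over four pixels in [0, 1].
WEIGHTS = [3.0, -3.0, 3.0, -3.0]
BIAS = -1.5
THRESHOLD = 0.1   # hypothetical; normally tuned on clean validation data


def predict_proba(x):
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def reduce_bit_depth(pixels, bits=2):
    """Round each pixel to the nearest of 2**bits evenly spaced levels in [0, 1]."""
    levels = 2 ** bits - 1
    return [round(p * levels) / levels for p in pixels]


def squeeze_score(x):
    """L1 distance between the class-probability vectors for raw and squeezed input."""
    p_raw = predict_proba(x)
    p_sq = predict_proba(reduce_bit_depth(x))
    return abs(p_raw - p_sq) + abs((1 - p_raw) - (1 - p_sq))


def is_suspicious(x):
    return squeeze_score(x) > THRESHOLD


clean = [2 / 3, 1 / 3, 2 / 3, 1 / 3]
# Each pixel moved 0.1 against the sign of its weight, enough to flip the prediction.
adversarial = [2 / 3 - 0.1, 1 / 3 + 0.1, 2 / 3 - 0.1, 1 / 3 + 0.1]

for name, x in [("clean", clean), ("adversarial", adversarial)]:
    print(f"{name}: p(class 1)={predict_proba(x):.3f}, "
          f"score={squeeze_score(x):.3f}, flagged={is_suspicious(x)}")
```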

While no defense is perfect, and several have been bypassed by attacks designed specifically to defeat them, combining these techniques makes a successful attack considerably harder.

Famous Adversarial Examples

Several well-known incidents have demonstrated the potential of adversarial attacks:

- Autonomous Driving: Researchers from the University of Washington, the University of Michigan, UC Berkeley, and other institutions showed that strategically placed stickers on stop signs could cause a traffic-sign classifier to misread them as speed limit signs.

- Image Classification: Google researchers showed that adding carefully computed, visually imperceptible noise to an image of a panda made a deep learning model classify it as a gibbon with 99.3% confidence.

- Voice Commands: Researchers have embedded hidden, and in some cases inaudible, commands into songs and other audio that voice assistants like Alexa and Google Assistant could interpret as legitimate instructions.

These examples prove that adversarial attacks are not just theoretical; they are practical and potentially dangerous in the real world.

The Future of AI Robustness

As AI becomes more integrated into critical infrastructure, the importance of building secure and explainable models will continue to grow. Future AI systems will need:

- Explainability: Models that can explain their decisions are easier to audit for manipulation.

- Certified Robustness: Mathematical guarantees that a model’s prediction will not change for any input perturbation smaller than a specified size.

- Continuous Learning: AI that evolves over time, adapting to new attack patterns and data.
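For a plain linear classifier, certified robustness has a closed form. If the score is f(x) = w · x + b, then by Hölder's inequality a perturbation δ changes the score by at most ‖δ‖∞ · ‖w‖₁, so the prediction provably cannot flip while ‖δ‖∞ < |f(x)| / ‖w‖₁. The sketch computes that radius for hypothetical weights and checks it against the worst-case perturbation; the helper names are illustrative, and certifying deep networks requires considerably more involved methods, such as interval bound propagation or randomized smoothing.

```python
# Hypothetical linear binary classifier: class 1 if w . x + b > 0, else class 0.
W = [2.0, -1.0, 0.5]
B = 0.1


def score(x):
    return sum(wi * xi for wi, xi in zip(W, x)) + B


def sign(v):
    return (v > 0) - (v < 0)


def certified_linf_radius(x):
    """Perturbation size below which the prediction is guaranteed not to change:
    |w . x + b| / ||w||_1 (follows from Hölder's inequality)."""
    return abs(score(x)) / sum(abs(wi) for wi in W)


def worst_case_perturbation(x, eps):
    """The L-infinity perturbation of size eps that moves the score furthest toward zero."""
    direction = -1.0 if score(x) > 0 else 1.0
    return [xi + direction * eps * sign(wi) for xi, wi in zip(x, W)]


x = [0.5, 0.2, 0.4]
r = certified_linf_radius(x)
inside = worst_case_perturbation(x, 0.99 * r)
outside = worst_case_perturbation(x, 1.01 * r)
print(f"certified radius: {r:.4f}")
print("inside radius keeps sign:      ", (score(inside) > 0) == (score(x) > 0))
print("just outside radius keeps sign:", (score(outside) > 0) == (score(x) > 0))
```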

Collaboration between academia, industry, and cybersecurity experts is essential to safeguard the next generation of AI technologies.

Conclusion

Adversarial attacks remind us that artificial intelligence, while powerful, is not infallible. The same algorithms that empower automation and innovation can be exploited if left unprotected. Understanding and mitigating adversarial threats is crucial for ensuring the reliability, safety, and trustworthiness of AI systems.

In the journey toward truly intelligent and secure AI, resilience is not an option—it is a necessity. By investing in robust model design, active monitoring, and continuous research, we can build a future where AI is not only smart but also safe.